🔙 Backfilling 50 million records, quickly

Over the last few months at carwow, a small team have reworked the way in which we process and propagate pricing changes made by dealerships, manufacturers, and governments, and the user-facing quotes which depend on them.

The database table which holds user-facing quotes (known as the

factory_order_quotes table) is one of the largest, oldest, and most-used at

carwow. Part of the work we had to carry out involved running numerous

“backfills” in order to migrate specific enum values for the status column.

The data the we needed in order to know how to migrate those records came from

three different databases accessible only feasibly by making multiple internal

HTTP API calls.

We needed to come up with a solution that allowed us to update ~50 million records, relying on data coming from three external sources, quickly, safely, with zero-downtime, and without significant impact on user experience and other production systems. And we did! 😄

How did we do it?

The major obstacle that we faced was being able to have access to the data we

needed in order to decide which new status a record should be moved to. Running

additional requests or queries to fetch that data live as we were backfilling

was out of the question: it would introduce significant overhead slowing down

the backfill (we were a bit impatient) and also introduce a significant amount

of additional load on our other applications.

One important attribute of this backfill was that we had already done the work

to update the live logic to update the status column appropriately, so only old

records existed to be migrated. We also knew that the ‘destination’ status for

any given record we wanted to backfill was stable, and wouldn’t change based on

when we ran the migration. This was a key property of the backfill we were

running. Knowing this meant that we could precalculate all of the records that

needed to be moved to a new status and what that status was. And best of all,

we could calculate this mapping using our data warehouse, bringing the impact

to users and production systems to zero. 🥳

This also allowed us to remove the need for cross-application API requests and

remove the need for additional database queries on the host applications, we

could instead run only UPDATE queries, reducing the amount of work we had to do

‘live’ by orders of magnitude!

So what did this process look like?

- Run a query on Snowflake (our data warehouse) to pull in all of the required data from databases powering several distinct production applications

- Dump the data into a temporary S3 bucket, split per 10k rows

- In a background job, download the file, load the IDs and run an

UPDATE

You can see the script used to run the query, split, and upload to S3 here.

The splitting of files and enqueuing as background jobs was important. It meant that we could easily and quickly stop and start the backfill if we needed to (if the database was on fire) in a recoverable manner. We basically had ‘checkpoints’ at every 10k records. It also meant that we could run multiple streams of jobs, each operating on a specific file or sequence of files.

If you are curious, the implementation used to run these jobs looked a little (a lot) like this.

Summary

The method we used allowed us to update ~48.7 million records (twice actually, because we replicate this particular table to a different database) in just under 6 hours! This is mostly due to removing the need for multiple batched reads from the database and API calls to other services before updates. Instead, we did this all those reads pre-emptively outside of our production environment, allowing us to more or less consistently pump updates to the database.

There are a few important caveats which should be taken into account before attempting a similar approach:

- Make sure that all the records in the initial set fetched from your warehouse will always remain within that set (if more records could potentially get added, that’s fine) i.e. the set of records you want to migrate should be fixed at the point you generate the mapping from your data warehouse (if it is possible for records to be removed from that set, this method is not directly applicable!).

- You may need to throttle between writing runs, maybe adding some breathing period between enqueuing subsequent jobs to allow for propagation of writes to following databases.

- Make sure that the writes you are making would not usually also trigger some other events (jobs, event publishing, etc.).

- If there are some events that need to occur or may automatically be triggered, make sure that downstream services are capable of handling the additional load incurred by a backfill, or whether another solution is required (we had to run a secondary backfill on another of our applications following this one).

- Make sure you have good monitoring and observability around your system to make sure it is coping well — it’s good to know when to give your system a break; before it breaks 😅

- We found significant performance improvements in our backfill runs when

ordering the dumped set of IDs we wanted to update in order (i.e.

ORDER BY id). I assume this is because it causes less thrashing (page faulting) of memory on the database, although I couldn’t find documentation to support this. YMMV.

Overall we found this method to be a great improvement on previous backfilling strategies we had developed, and have since used it multiple times (on the same table, and others) to enable us to evolve our data models as the business grows, requirements change, and we hit scaling issues.

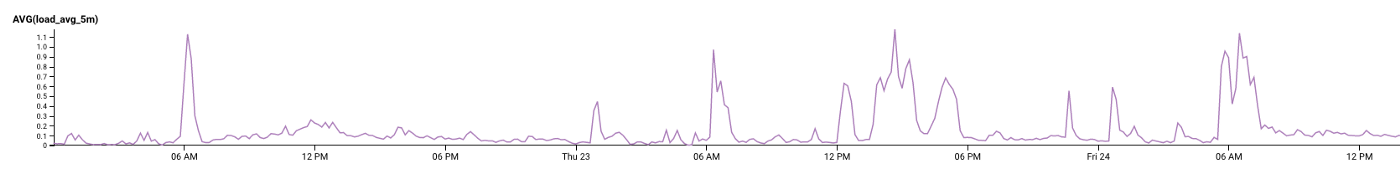

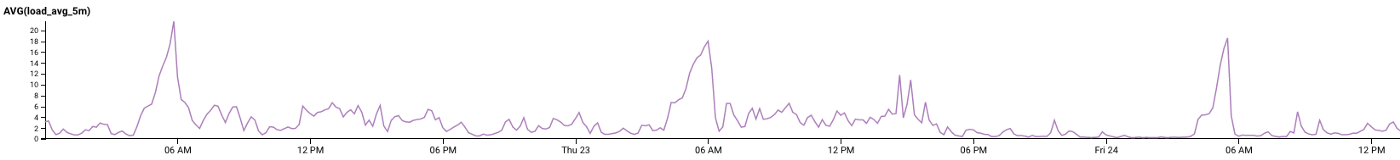

There were some minor issues (which you may have deduced from the graphs above) related to database load that we had to keep a close eye on and stop our backfill for a little bit in order to allow our following/replica databases to catch up on commits. Thanks to the idempotent nature of the jobs we ran and the monitoring we had available to us we could proactively pause the backfill before having any impact on user-facing services.

A graph of 5-minute load average on our leader database — updates took place between 12 pm and 6 pm on the 23rd

A graph of 5-minute load average on our follower database — updates took place between 12 pm and 6 pm on the 23rd

Thanks a lot for taking the time to read this. I hope that perhaps you or your team might find the method above applicable in helping to facilitate the evolution of your company’s data model as your project grows and the requirements (functional and non-functional) change! If you have any questions or feedback feel free to ping me in the comments or on twitter @CGA1123

Until next time! 👋

This post was originally published on “carwow Product, Design & Engineering” on Medium (source)