🌒 Shadow requesting for great good

Encouragingly, traffic to the carwow applications has grown significantly over the last couple of years, and of course we hope this trend continues. Our Software Engineering Tools & Infrastructure (SETI 👽) Team has the task of ensuring that our applications, infrastructure, and tooling continue evolving to support the business as it grows.

Recently, SETI has been investigating how our applications perform under significant increases in traffic. One of the methods we’ve used is commonly known as shadow requesting, and it has been useful enough to us that I’d like to share it here.

So what is shadow requesting?

At its core, shadow requesting means replaying live requests shortly after they have completed, either to the same host (to simulate additional load) or to a different host (to verify that the responses match).
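
As a rough illustration of the replay step, here is a minimal Ruby sketch. It assumes a captured request is stored as a method, path, and headers; `CapturedRequest`, `build_replay`, and `send_replay` are hypothetical names, not umbra's API. Pointing `target_host` at the original host adds load, while pointing it at a different host lets you compare responses.

```ruby
require "net/http"
require "uri"

# Hypothetical shape of a captured request; umbra's real encoding may differ.
CapturedRequest = Struct.new(:http_method, :path, :headers, keyword_init: true)

# Builds (but does not send) a replay of a captured GET request against
# target_host: the original host (extra load) or another host (parity check).
def build_replay(captured, target_host)
  raise ArgumentError, "only GET requests are replayed" unless captured.http_method == "GET"

  uri = URI.join(target_host, captured.path)
  request = Net::HTTP::Get.new(uri)
  captured.headers.each { |name, value| request[name] = value }
  [uri, request]
end

# Sends the replay; a parity check could compare the returned status and body
# with the original response.
def send_replay(uri, request)
  Net::HTTP.start(uri.host, uri.port, use_ssl: uri.scheme == "https") do |http|
    http.request(request)
  end
end
```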

Why shadow requests?

One of the benefits of load testing via shadow requests is that we are simulating real traffic patterns without any configuration overhead; by replaying the requests almost in real-time we create a lifelike scenario of additional users on our systems without needing to maintain a repository of user flows and their associated HTTP requests.

How are we doing this?

We developed a tool, umbra, to facilitate shadow requesting. It works as a Rack middleware that sends incoming requests, via a Thread Queue, to a Redis pub/sub channel. In a separate process (or processes), a subscriber listens to that same channel and replays the requests.
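
The flow above can be sketched as a small Rack middleware. This is an illustrative sketch, not umbra's implementation: `ShadowPublisher`, the `shadow-requests` channel name, and the JSON payload shape are all assumptions. The publisher can be any object with `publish(channel, message)`, such as a redis-rb client; a background thread drains the `Thread::Queue`, so the web request never waits on Redis.

```ruby
require "json"

# Hypothetical shadowing middleware: hands each request to a background
# thread via a Thread::Queue, and the thread publishes it to a pub/sub channel.
class ShadowPublisher
  CHANNEL = "shadow-requests" # hypothetical channel name

  def initialize(app, publisher)
    @app = app
    @publisher = publisher # anything with #publish(channel, message)
    @queue = Thread::Queue.new
    @worker = Thread.new { drain }
  end

  def call(env)
    response = @app.call(env)
    @queue << serialize(env) # enqueue once the real request has been handled
    response
  end

  # Closes the queue and waits until every queued message has been published.
  def shutdown
    @queue.close
    @worker.join
  end

  private

  def serialize(env)
    JSON.generate({
      "method" => env["REQUEST_METHOD"],
      "path" => env["PATH_INFO"],
      "query" => env["QUERY_STRING"]
    })
  end

  def drain
    # Thread::Queue#pop returns nil once the queue is closed and empty.
    while (message = @queue.pop)
      begin
        @publisher.publish(CHANNEL, message)
      rescue StandardError => e
        warn "shadow publish failed: #{e.message}" # never let shadowing kill the worker
      end
    end
  end
end
```

One design caveat: threads are not carried across `fork`, so under a forking server (for example Puma in cluster mode) the worker thread would need to be started inside each worker process.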

I’ve added the current umbra configuration for one of our applications in this gist.

What do we need to watch out for?

We currently configure umbra to shadow only idempotent (more precisely, read-only) requests. As a general rule, this means only GET requests; however, we also use an allow/deny list of endpoints to ensure we are not inadvertently manipulating production data.
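
A request filter along these lines might look like the following sketch. `ShadowFilter` and the example patterns are hypothetical; the rule it encodes is the one described above: only GET requests are eligible, and a deny-list match always wins over the allow list.

```ruby
# Hypothetical filter deciding whether a request may be shadowed.
class ShadowFilter
  SHADOWABLE_METHODS = %w[GET].freeze

  # allow/deny are arrays of Regexps matched against the request path.
  def initialize(allow:, deny:)
    @allow = allow
    @deny = deny
  end

  def shadow?(http_method, path)
    return false unless SHADOWABLE_METHODS.include?(http_method)
    return false if @deny.any? { |pattern| pattern.match?(path) } # deny wins

    @allow.any? { |pattern| pattern.match?(path) }
  end
end
```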

We also made sure that umbra never replicates its own requests, which prevents an exponential increase in the number of requests and, eventually, a self-inflicted Denial of Service.
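
One simple way to get that guarantee, sketched here under assumptions, is for the replayer to tag every shadow request with a marker header and for the middleware to skip anything that carries it. The `X-Shadow-Request` header and the helper names are hypothetical, not necessarily what umbra uses.

```ruby
# Hypothetical marker header added by the replayer to every shadow request.
SHADOW_HEADER = "X-Shadow-Request"
# Rack exposes request headers in env as HTTP_ + upcased name, "-" -> "_".
SHADOW_ENV_KEY = "HTTP_" + SHADOW_HEADER.upcase.tr("-", "_")

# Replayer side: add the marker to the outgoing headers.
def mark_as_shadow(headers)
  headers.merge(SHADOW_HEADER => "1")
end

# Middleware side: a request carrying the marker is itself a replay.
def shadow_request?(env)
  env.key?(SHADOW_ENV_KEY)
end

# Only original (non-shadow) traffic is ever queued for replay.
def enqueue_if_original(env, queue)
  queue << env unless shadow_request?(env)
end
```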

We have rich monitoring and alerting, powered by Honeycomb. This enables us to monitor our applications and see the point at which they start to ‘sweat’. Without good monitoring, we would not be able to gain any benefits or insights about how our applications behave under the artificially increased load we add via shadow requesting.

What are some of the outcomes?

We recently tested a new middleware using umbra. One of our applications receives around 50% of its traffic from internal clients. We already set client-side timeouts on internal requests in order to mitigate cascading performance degradation.

Under significant load, our servers may queue requests for longer than the client’s timeout period. When this happens, the server will usually still process those requests, even though the client has already ‘given up’.

We added a middleware that drops any request that took longer to reach our application (from initial ingestion at the routing layer to reaching this middleware) than the timeout set by the client, which all our internal HTTP clients send in an X-Client-Timeout header. Using umbra, we were able to test this middleware safely under load, and the results were positive!
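
A minimal sketch of such a load-shedding middleware follows. It assumes the routing layer sets `X-Request-Start` to the arrival time in milliseconds since the epoch (as Heroku's router does) and that `X-Client-Timeout` is also in milliseconds; the class name, the units, and the 503 response are assumptions rather than carwow's actual code. The clock is injectable so the logic can be tested.

```ruby
# Hypothetical load-shedding Rack middleware. Assumed headers (milliseconds):
#   X-Request-Start  - arrival time at the routing layer, ms since the epoch
#   X-Client-Timeout - how long the calling client is willing to wait
class ClientTimeoutShedder
  def initialize(app, clock: -> { Process.clock_gettime(Process::CLOCK_REALTIME, :millisecond) })
    @app = app
    @clock = clock # injectable so the logic can be tested deterministically
  end

  def call(env)
    # Rack exposes request headers as HTTP_* keys; malformed values become nil.
    start_ms = Integer(env["HTTP_X_REQUEST_START"].to_s, exception: false)
    timeout_ms = Integer(env["HTTP_X_CLIENT_TIMEOUT"].to_s, exception: false)

    # Without both headers we cannot tell how long the client will wait.
    return @app.call(env) unless start_ms && timeout_ms

    waited_ms = @clock.call - start_ms
    if waited_ms > timeout_ms
      # The client has already given up, so skip the work entirely.
      [503, { "content-type" => "text/plain" }, ["client timeout exceeded"]]
    else
      @app.call(env)
    end
  end
end
```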

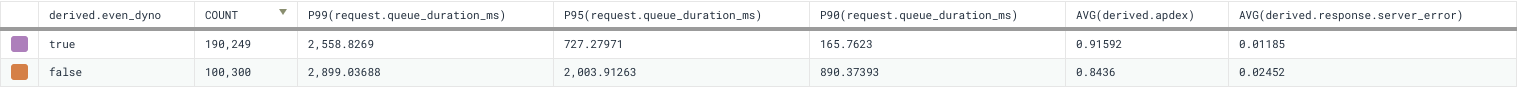

We enabled the middleware only on even-numbered web processes/dynos, and only for shadow requests, which let us compare the performance of web requests processed with and without the new load-shedding middleware.

Results of the even/odd load-shedding test on shadow traffic only: purple lines show requests processed with load shedding enabled, orange lines without.
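
The even/odd split could be expressed as a gate like the sketch below. It assumes Heroku-style dyno names in the `DYNO` environment variable (for example `web.2`) and a hypothetical `X-Shadow-Request` marker header on replayed requests; `experiment_enabled?` and `ExperimentGate` are illustrative names.

```ruby
# True only for shadow requests handled by an even-numbered web dyno.
def experiment_enabled?(env, dyno: ENV.fetch("DYNO", ""))
  type, number = dyno.split(".", 2)
  return false unless type == "web" && number&.match?(/\A\d+\z/)

  number.to_i.even? && env.key?("HTTP_X_SHADOW_REQUEST")
end

# Routes matching requests through the middleware under test; everything
# else goes straight to the plain app.
class ExperimentGate
  def initialize(app, dyno: ENV.fetch("DYNO", ""), &wrap)
    @plain = app
    @treated = wrap.call(app) # e.g. the app wrapped in the load-shedding middleware
    @dyno = dyno
  end

  def call(env)
    experiment_enabled?(env, dyno: @dyno) ? @treated.call(env) : @plain.call(env)
  end
end
```

Because real user traffic and odd-numbered dynos bypass the wrapped middleware, the two groups of shadow requests can be compared side by side.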

The key takeaways from this test were:

- We were able to process 190% as many requests with load shedding enabled as without it

- Our Apdex score was 10% higher with load shedding enabled

- P90 and P95 request queuing times were significantly lower with load shedding enabled (the difference was smaller at P99)

- Server error rates were roughly halved for requests processed with load shedding enabled

The new load-shedding middleware looks to be a success!

Thanks to shadow requesting, we were able to safely put our system under additional load and recreate the conditions in which we expect this middleware to help us. This gave us confidence that the change would help us provide a more resilient service when our application comes under significant load. As a result, we have since deployed the middleware to all of our applications.

Over the coming months, we will continue testing new ideas to improve the performance of our applications in preparation for the projected growth in our user base. We’ve open-sourced umbra on GitHub under the terms of the MIT License and published it on RubyGems.org.

We will continue to use it to test new solutions and to benchmark the performance of our applications. This will help us identify where improvements can be made and enable us to give more accurate, better-informed assessments of the capabilities of our applications and surrounding infrastructure. umbra is now another tool we can use to ensure that carwow is able to deliver the best possible car buying experience to our growing user base.

Perhaps shadow requesting is something your teams could look into to build confidence in the performance of your applications. If you are running a Rack-based application (such as Rails), umbra may be a good fit for your team too!

This post was originally published on “carwow Product, Design & Engineering” on Medium.