🗂 Speeding up our Rails apps with asynchronous cache writes

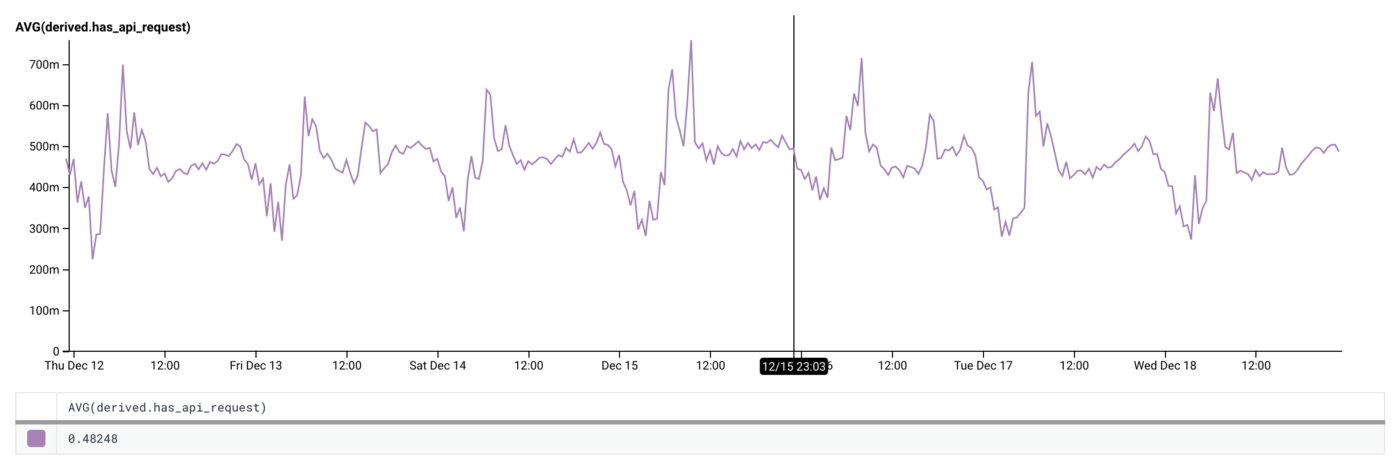

At carwow our applications can be chatty, making one or several API calls to each other in order to fulfil a request. In fact, around 48% of all user-facing requests in the last 7 days made at least one API request to another one of our applications before responding.

Average % of user-facing requests making at least 1 (internal) API call over a 7 day period (48%)

One of the tools we use to reduce the load our applications put on each other is caching. We cache both on the client side (using Rails.cache) and on the server side (by putting Fastly ‘in front’ of our web servers). This allows us to reduce network overhead on the client side as well as reduce the load on the server side.

Around 73% of our requests end up being served from the client-side Rails.cache. While investigating some particularly slow endpoints, I found myself unable to account for somewhere between ten and a hundred milliseconds of processing time. Using Honeycomb to investigate a couple of specific traces, I could see our applications spending some amount of time between getting a response and continuing on with execution. I just didn’t know what they were spending it on! As it turns out, there are two main things we do via middleware on our HTTP clients: caching and parsing. I’m going to focus on the caching part in this post, but the parsing part had just as much to do with these unaccounted milliseconds (perhaps more on that in a separate blog post 😉).

Something I’ve learned to do during optimisation work is to think of all the changes we intend to make as experiments, and that means taking a lot of measurements. It’s only by measuring that I can have confidence that the changes I made had the intended impact. So how can we measure the impact of cache writes?

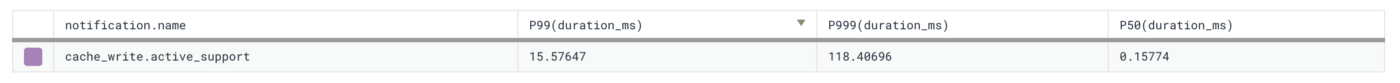

By making use of the cache_write.active_support notification and pushing the results of that to Honeycomb, I was able to get a view of how long we usually spend on caching.
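As a rough illustration of that kind of measurement, here is a minimal sketch of a subscriber for the cache_write.active_support notification. The CacheWriteTimings module and its in-memory samples list are hypothetical stand-ins for whatever tracing client you use (this is not our actual Honeycomb integration), and the guard around the subscription only exists so the snippet also loads outside a Rails process.

```ruby
# Hypothetical collector: replace the body of `call` with a call to your
# tracing or metrics client. Samples are kept in memory purely for illustration.
module CacheWriteTimings
  @samples = []
  @lock = Mutex.new

  class << self
    attr_reader :samples

    # Receives the standard five notification arguments;
    # `started` and `finished` are Time objects.
    def call(_name, started, finished, _id, payload)
      duration_ms = (finished - started) * 1000.0
      @lock.synchronize do
        @samples << { key: payload[:key], duration_ms: duration_ms }
      end
    end
  end
end

# In a Rails initializer ActiveSupport is always loaded; the guard just lets
# this file be loaded in plain Ruby as well.
if defined?(ActiveSupport::Notifications)
  ActiveSupport::Notifications.subscribe("cache_write.active_support", CacheWriteTimings)
end
```

Each cache write then produces one sample with the cache key and the time spent in milliseconds, which is enough to chart percentiles.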

It turns out it wasn’t awful. As the figure below shows, the P99 was around 15ms, but wouldn’t it be nice if that was closer to 0ms?

Time taken for cache writes

We shouldn’t be holding up any requests in order to update our cache; cache updates should be best-effort and eventually consistent.

In order to facilitate this behaviour, we built ActiveSupport::Cache::AsyncWriteStore, which wraps an existing cache-store implementation and overrides its #write method to push its work to a thread-queue instead of executing synchronously.
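To make the idea concrete, here is a simplified sketch of the wrap-and-enqueue pattern in plain Ruby. It is not the real ActiveSupport::Cache::AsyncWriteStore: the AsyncWriteCache name, the workers option and the shutdown method are all assumptions made for illustration, and the wrapped store only needs to respond to write, read, delete and exist?.

```ruby
require "forwardable"

# Hypothetical wrapper, for illustration only.
class AsyncWriteCache
  extend Forwardable

  # Reads and deletes go straight to the wrapped store. `fetch` is left out on
  # purpose: delegating it would let the inner store write synchronously on a miss.
  def_delegators :@store, :read, :delete, :exist?

  def initialize(store, workers: 1)
    @store = store
    @queue = Queue.new
    @workers = Array.new(workers) { Thread.new { drain } }
  end

  # Enqueue the write and return immediately, so the caller never waits
  # on the backing store.
  def write(name, value, options = nil)
    @queue << [name, value, options]
    true
  end

  # Flush everything already queued, then stop the worker threads.
  def shutdown
    @workers.size.times { @queue << :stop }
    @workers.each(&:join)
  end

  private

  # Worker loop: pop jobs until a :stop marker arrives. Failures are logged
  # and dropped, in keeping with best-effort caching.
  def drain
    while (job = @queue.pop) != :stop
      begin
        name, value, options = job
        @store.write(name, value, options)
      rescue StandardError => e
        warn "async cache write failed: #{e.class}: #{e.message}"
      end
    end
  end
end
```

With a wrapper like this, a read issued immediately after a write may still miss, which is the eventually consistent trade-off described above.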

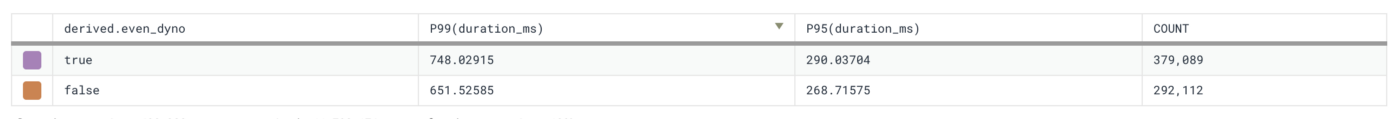

To test the effectiveness of this change, we rolled it out to 50% of our application servers and had a look at the overall performance impact on web requests. We saw some pretty good gains!

Results of an even/odd test, odd dynos (false / orange) had asynchronous cache-writes enabled via ActiveSupport::Cache::AsyncWriteStore
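One way to split servers like this is sketched below, assuming a Heroku-style DYNO environment variable such as "web.3". The async_cache_writes? helper is hypothetical and not necessarily how our rollout was wired up.

```ruby
# Hypothetical helper: enable asynchronous cache writes on odd-numbered dynos
# only. Assumes DYNO looks like "web.3"; anything without a trailing number
# keeps synchronous writes.
def async_cache_writes?(dyno = ENV["DYNO"])
  number = dyno.to_s[/\d+\z/]
  !number.nil? && number.to_i.odd?
end
```

The result can then be used when choosing which cache store to configure, and recorded on each trace so both groups can be compared side by side.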

Overall P99 was ~100ms faster and P95 ~20ms. Every little bit helps!

Writing to cache asynchronously may be a nice and relatively easy way to chop off some of the overhead of caching while still maintaining the benefits!

One thing to take into account is that this adds another pool of threads for your Ruby process to manage, which may have an adverse effect on overall performance, as Ruby still needs to do those writes at some point. Effectiveness might vary based on the specific use-cases of your application!

This post was originally published on “carwow Product, Design & Engineering” on Medium (source)